The RSA Conference 2022 – one of the world’s premier IT security conferences – was held June 6th-9th in San Francisco. The first in-person RSA event since the start of the global pandemic had a lower turnout than in years past (26,000 attendees compared to 36,000). But attendees and presenters alike made up for it with their eagerness to explore the IT security trends that have emerged over the past year, and RSA Conference 2022 delivered on that tenfold.

Following the remote work pivot we saw in 2020, IT security has had to evolve quickly to remain effective, flexible and resilient in today’s dynamic hybrid/remote work environments. This year’s RSA Conference and the upcoming Black Hat USA 2022 in August are providing vital venues for IT security pros and business leaders to address challenges in today’s rapidly evolving security landscape.

Here are some of the key trends we observed at the first marquee cybersecurity event of the post-pandemic era:

1. Market landscape for XDR grows more crowded

RSAC was abuzz with numerous security providers – large vendors and small start-ups alike – promoting capabilities and options offering new flavors of EDR and MDR. Based on our customer and analyst interactions, it was evident that the definition of XDR is still evolving, and that customers are still trying to determine which solution best fits their specific use case.

Most customers alluded to the cybersecurity skills shortage, and one of the key market drivers remains a “managed” component tailored to an organization’s response capabilities. Even as the sophistication of malicious actors grows rapidly, fundamentals such as initial compromise detection and lateral movement prevention still seem to define customers’ preferences.

2. Threat intelligence becomes key to addressing workforce gap

With new threats emerging daily, the industrywide shortage of skilled professionals is placing additional stress on security teams. Threat intelligence solutions using AI/ML technologies can prevent false positives and reduce alert fatigue – helping cybersecurity professionals focus on strategic priorities instead of spending all their time reacting to security alerts and potential incidents.

We have seen this trend building over the years as increasing numbers of security appliance vendors have come to rely on our BrightCloud® Threat Intelligence for its accuracy, depth and contextual intelligence in order to stay a step ahead of a rapidly evolving threat landscape.

3. Cyber insurance becomes mainstream discussion

As cyberattacks have become more costly and more challenging to track, cyber insurance has gained prominence across the industry. Unfortunately, as cyber risks mount, insurers are raising prices for coverage, requiring customers to answer lengthy questionnaires and limiting who they provide cyber insurance coverage to.

The cyber insurance market is expected to reach around $20B by 2025. However, as MSPs and customers look to cyber insurance to manage their risk exposure, more emphasis is expected on the fine print of the coverage – in particular, on exclusions and limits around brand reputation and restoring normal operations.

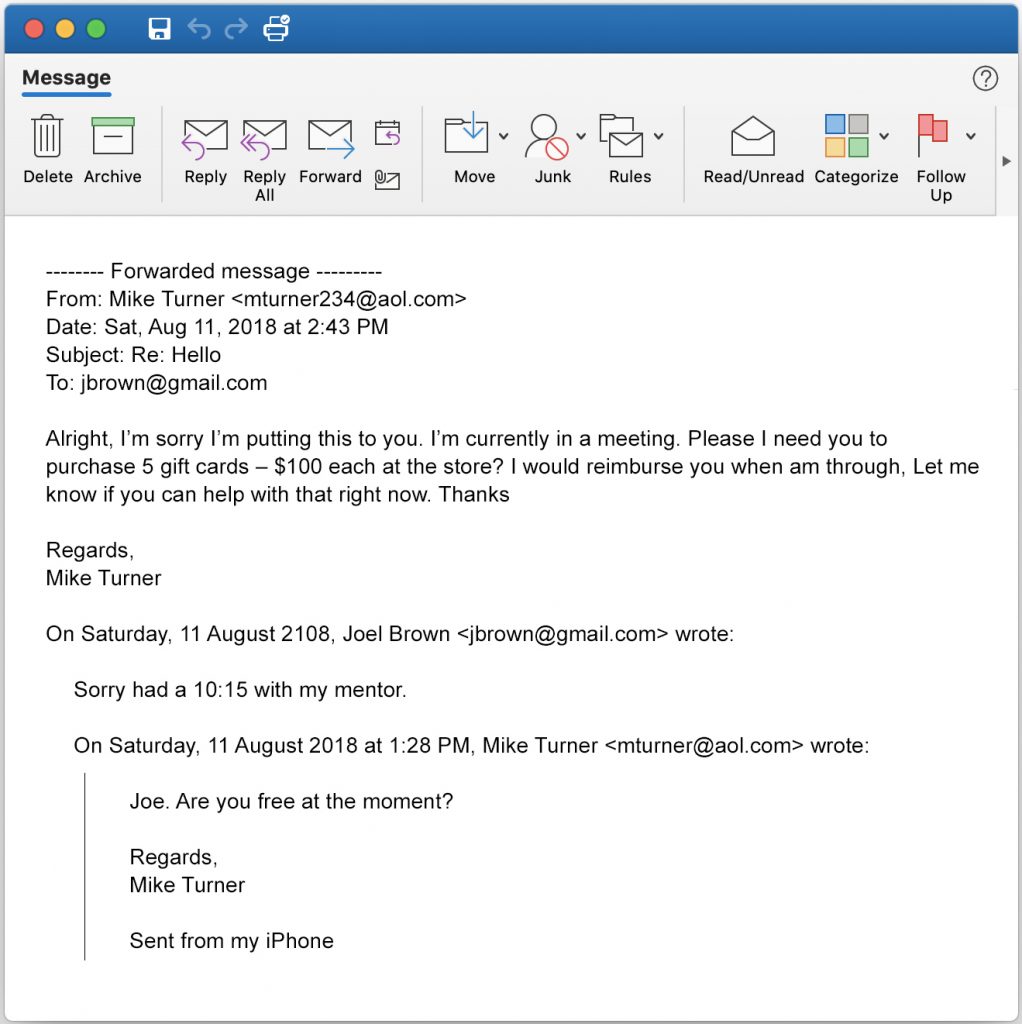

4. Business Email Compromise gains prominence

Although there is a mounting body of evidence that shows ransomware is and will continue to be a concern for businesses, there’s also an argument to be made for an eventual slowdown in ransomware attacks. As discussed at this year’s RSA Conference, many preventative measures such as law enforcement crackdowns, tighter cryptocurrency regulations and ransomware-as-a-service (RaaS) operator shutdowns are putting pressure on ransomware perpetrators.

Phishing has now become the most popular avenue of attack for hackers because it’s relatively easy to trick people into clicking on malicious links. 96% of phishing attacks are sent via email – and 74% of US businesses have fallen victim to phishing attacks. This is what prompted the FBI to issue a warning about the $43B impact of Business Email Compromise (BEC) scams.

5. Cyber Resilience planning puts focus on recovery readiness

The growth in digital attack surfaces has added a new dimension to traditional data protection approaches in terms of compliance with emerging regulations. This theme was validated in the day-two keynote, where panelists reiterated the importance of data protection and governance in the context of privacy.

This year, ransomware events have increased by more than 10%, and the average cost of a data breach to organizations has risen to $4.2 million. Customers are increasingly taking steps to protect their data, with an emphasis on recovery and minimizing downtime. This growing focus on becoming cyber resilient is a wise course of action in a threat landscape in which malicious actors only need to get lucky once!